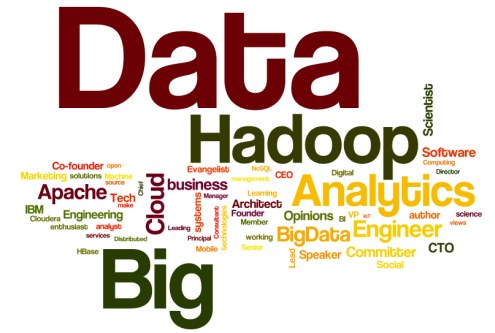

Common Mistakes Made by Hadoop Engineers

Hadoop and big data have become popular across the market. Every industry now has some footprint of Android app development tools and big data, which has led to wider acceptance of big data analytics certification. But despite all the talk about Hadoop, there are some serious issues that, if not taken care of, can cause major setbacks for a project. Some common mistakes made by Hadoop engineers are:

Migration Plan

Many Hadoop novices underestimate the impact a bad migration plan can have on a project. A migration should never be done as a big bang unless there is a relevant business justification. The migration plan should be a well-thought-out decision based on the use case for Hadoop and on how the plan ties in with the key milestones of other projects. Assuming that a Hadoop project affects the system in a silo can cost the project dearly.

So, any tips? Yes! Break the migration into smaller use cases while keeping a holistic view of the project, and tie them together in an agile way. This not only optimizes your resources but also makes for a far more successful Hadoop project.

Data Lake Management

Managing a data lake might look like a simple job on the surface. A deeper look shows that it is quite a complex task. Developing a data lake for a smaller organisation may pose fewer challenges, but for a mid-sized to large enterprise, where data is ingested into the system at very short intervals, the concerns are much bigger.

The key considerations here are automation of data ingestion and data governance. Managing what enters your data lake, how it enters, and how it stays there is important so that the project eventually delivers value to the client.
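One way to picture automated governance at the point of ingestion is a small gate that every incoming batch must pass. The sketch below is illustrative only: the rule set, the `REQUIRED_METADATA` fields, and the `check_ingest` helper are assumptions made for this example, not part of Hadoop or any particular tool.

```python
from dataclasses import dataclass, field

# Hypothetical governance rule: every dataset entering the lake must
# declare an owner, a source system, and the columns it is expected to carry.
REQUIRED_METADATA = ("owner", "source", "schema")


@dataclass
class IngestResult:
    accepted: bool
    problems: list = field(default_factory=list)


def check_ingest(metadata: dict, records: list) -> IngestResult:
    """Decide whether a batch may enter the lake (illustrative rules only)."""
    problems = []

    # Rule 1: governance metadata must be present and non-empty.
    for key in REQUIRED_METADATA:
        if not metadata.get(key):
            problems.append(f"missing metadata: {key}")

    # Rule 2: every record must carry exactly the declared columns.
    expected = set(metadata.get("schema") or [])
    for index, record in enumerate(records):
        if expected and set(record) != expected:
            problems.append(f"record {index} does not match declared schema")

    return IngestResult(accepted=not problems, problems=problems)
```

A batch that fails the gate is rejected with a list of reasons, so what enters the lake stays traceable to an owner and a declared structure.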

Security Enablement

Not discussing security at the beginning can be a bad move. It is very important to devote time at the outset to classifying information as sensitive or non-sensitive. Finding out later that a client's sensitive data is out in the open can put the project at risk and may even result in its closure. Also, in the business world, data keeps moving from not-so-important to critical as the market changes. These dynamic changes should not be ignored in a big data deployment.

To ensure security, deployment discussions must cover authentication, authorisation and audit. This gives a holistic view of who will access what, and of how regular checks will be put in place to maintain data security.
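As a rough illustration of how authorisation and audit fit together, the sketch below checks a read request against a sensitivity label and records every decision. It assumes authentication has already happened upstream; the `ROLE_CLEARANCE` policy, the role names, and the `can_read` helper are hypothetical and chosen only for this example.

```python
from datetime import datetime, timezone

# Hypothetical policy: which sensitivity labels each role may read.
ROLE_CLEARANCE = {
    "analyst": {"non-sensitive"},
    "compliance": {"non-sensitive", "sensitive"},
}

# Stand-in for an audit trail; a real deployment would use durable storage.
audit_log = []


def can_read(user: str, role: str, dataset: str, label: str) -> bool:
    """Authorise a read and record the decision for later audit."""
    # Unknown roles get an empty clearance set, so they are denied by default.
    allowed = label in ROLE_CLEARANCE.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "label": label,
        "allowed": allowed,
    })
    return allowed
```

Because denied requests are logged as well as granted ones, a periodic review of `audit_log` shows both who accessed what and who tried to. If a dataset's label changes from non-sensitive to sensitive, only the label passed in changes, not the policy code.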

These mistakes around migration, data lake management and security are common across projects. They deserve due diligence before deployment, through proactive and agile decision-making.
